Guest post by Christopher Gelpi

The folks at the TRIP project at William and Mary have begun fielding a series of “snap polls” that ask international relations scholars for their opinions on policy issues of the day. The results of the first snap poll were recently released at Foreign Policy, and the TRIP project has been very kind in sharing their data in an effort to make them more useful. Idean Salehyan gave us a great overview of the goals and potential contributions of this project, and Erik Voeten also provided a nice analysis of which (lucky few!) scholars correctly predicted Russian intervention in Ukraine.

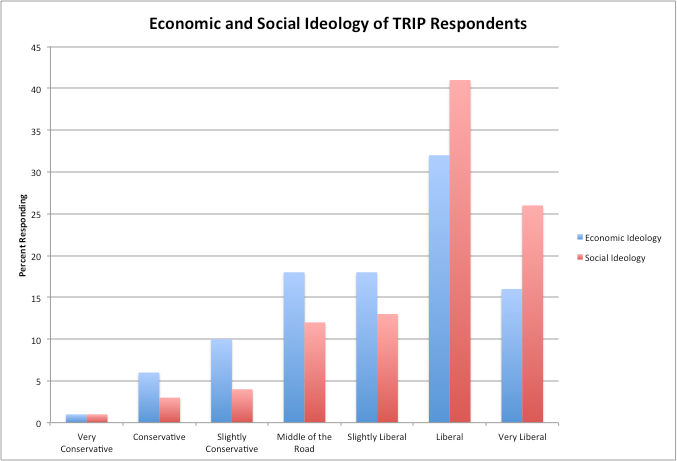

Another important question that can be addressed by these data is the extent to which the policy preferences and predictions of international relations scholars are related to their political ideology as opposed to their professional expertise. Previous TRIP surveys asked respondents to place their social and economic ideologies on a seven-point scale ranging from “Very Conservative” to “Very Liberal,” and the TRIP team was able to match those responses to the respondents in the recent TRIP snap poll.

Not surprisingly, the respondents to the recent TRIP snap poll were fairly liberal in both their social and economic ideologies. Nearly 46% of the respondents described themselves as “Liberal” or “Very Liberal” on economic issues, and 67% identified themselves as socially “Liberal” or “Very Liberal.” Only 7% of TRIP respondents identified as economically conservative, and only 4% as socially conservative. According to a recent Gallup poll, about 23% of the American public identify as politically liberal, while about 38% identify as conservative. So the TRIP survey confirms the widely held perception that academics (or at least International Relations scholars) are liberal.

But how much are our policy views influenced by our ideologies as opposed to our professional expertise? In order to investigate this issue, I examined the impact of economic and social ideology on several of the TRIP responses. I began by looking at the questions about policy preferences, such as attitudes about whether the US is spending too much money on the military. We should not be too surprised (or concerned) if ideology is related to these kinds of responses, because they relate more closely to political preferences than to professional expertise. Scholars have the same right to an opinion on the defense budget as anyone else, and there is no reason to believe that professional training can tell you what the level of defense spending “should” be.

Next, however, I turned my attention to questions that asked scholars to make predictions about the future behavior of other nations. In these cases we would hope that scholarly expectations about future events (such as conflict in Ukraine or Syria) are influenced by professional factors such as country expertise, methodological or theoretical training, and so on. A link between domestic political ideology and such predictions might raise concerns about scholarly objectivity.

I estimated the impact of economic and social ideology on the probability that TRIP respondents would state that the US spends too much on the military, that Russia would intervene militarily in Ukraine, and that Syria will eventually comply with its obligation to destroy its chemical weapons. In each analysis I included statistical controls for the respondent’s methodological approach, substantive or geographic area expertise, and theoretical orientation. I also included a control variable for scholars from the top 25 ranked departments.

The colored dots in the figure represent the effect of economic and social ideology on responses to each of the questions. The green dots represent the responses to the defense-spending question. The red dots represent the responses to the predictions about Ukraine, and the blue dots represent the predictions about Syria. The vertical black bars represent the uncertainty surrounding these statistical estimates. If the vertical bars do not cross zero, then the estimated effect of ideology is statistically significantly different from zero.
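For readers who want to see the "bars do not cross zero" rule spelled out, here is a minimal sketch in Python. It assumes the bars are 95% confidence intervals built from an estimate and its standard error, which the post does not state. The function names and the numbers are hypothetical and are not taken from the TRIP analysis.

```python
def confidence_interval(estimate, std_error, z=1.96):
    """Return (lower, upper) bounds of an approximate 95% interval.

    Assumes a normal approximation; z=1.96 is the usual 95% multiplier.
    """
    margin = z * std_error
    return estimate - margin, estimate + margin


def excludes_zero(lower, upper):
    """True when the whole interval lies on one side of zero."""
    return lower > 0 or upper < 0


# Hypothetical (estimate, standard error) pairs, for illustration only.
examples = {
    "effect A": (0.04, 0.015),
    "effect B": (0.01, 0.012),
}

for label, (estimate, std_error) in examples.items():
    lower, upper = confidence_interval(estimate, std_error)
    verdict = "significant" if excludes_zero(lower, upper) else "not significant"
    print(f"{label}: {estimate:+.3f} [{lower:+.3f}, {upper:+.3f}] -> {verdict}")
```

In this sketch the first interval sits entirely above zero, so it counts as statistically significant, while the second straddles zero and does not.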

Not surprisingly, we see that both economic and social ideology are strongly associated with attitudes about defense spending. The green dots indicate that an increase of one point on the seven-point liberalism scale increases the probability that a respondent will say that we spend too much on the military by about 4%. Since most of the respondents are somewhere between “middle of the road” and “very liberal,” this means that changes in economic and social liberalism may have an impact as large as 15-20% on the likelihood that an IR scholar will say that we spend too much on the military.

However, the red dots indicate that ideology had little or no impact on scholars’ beliefs about whether Russia would intervene militarily in Ukraine. The estimated effects for ideology are around 1% or less, and these effects are not statistically significant. Consistent with Erik Voeten’s results, I find that factors such as theoretical orientation and substantive expertise mattered much more in predicting Russian action.

When it comes to Syria, on the other hand, ideology seems to have crept back into scholars’ calculations. The blue dots indicate that the impact of ideology on scholars’ predictions about Syrian compliance is very similar to its impact on attitudes about defense spending. Thus changing a scholar’s ideology from “middle of the road” to “very liberal” might change the likelihood that they would predict Syrian compliance by nearly 20%.

So what makes the difference between Ukraine and Syria? Why were IR scholars politically neutral in one case and ideologically influenced in the other? I think one possible explanation is the differing level of politicization of these two issues. Prior to Russian intervention, Ukraine was not on the American political agenda and was not a partisan issue. Thus when scholars were asked about intervention there was no reason for them to formulate their response with regard to a partisan debate. Syria, on the other hand, has been a partisan issue for some time, and Republicans have sharply criticized President Obama’s decision to engage in diplomacy as dangerously naïve. In the midst of such a partisan contest, scholarly predictions about the likely behavior of Syria may take on a political tone.

As scholars and as citizens we all have an obligation to engage in the public arena, and instruments like the TRIP snap polls help us to remain engaged. However, the responses to the recent TRIP snap poll also remind us of the difficulty of maintaining scholarly objectivity in the midst of a polarized environment. Journalists and policy makers will often seek scholarly opinions on issues that are hotly contested along party lines. It is in precisely these circumstances that we need to be most careful and self-conscious about separating professional expertise from political opinion.

Christopher Gelpi is Chair of Peace Studies and Conflict Resolution at the Mershon Center for International Security and Professor of Political Science at The Ohio State University.


Hi Chris, thanks for beating on this data a bit. I probably should have asked you before you posted this, but wondered if you are interested in looking at one more thing.

I think your analysis is interesting and your guess on politicization makes a lot of sense. Also think your suggestion that we use these data with eyes wide open makes sense. We need more of this to understand the conditions under which the wisdom of an “expert” crowd is more/less useful.

While you can’t do it with every question (because we don’t have public opinion data for all questions), I am interested in the residual “epistemic effect” after we control for ideology. Are conservative members of the public indistinguishable from conservative members of the academy? Are moderate members of the public indistinguishable from moderate members of the academy? Etc… If there is no residual epistemic effect of training and expertise, and ideology explains all the variance, then we really should be skeptical of “expert opinion” once an issue gets politicized. Many of the issues we will ask questions about will, in fact, be politicized. In this case I think you could do it for the defense budget question because Pew is good about sharing data. I don’t think we have public opinion data on the Syria or Ukraine questions.

In an ideal world we would have a survey of IR scholars in the field at the same time we have a survey of the public. Then we could compare answers and control for ideology (and other things) in an effort to identify the conditions under which expertise explains variation.

We tried to do something like this with imperfect data on support for and effects of the Iraq War, but it would be better to design and execute this ex ante.

Thanks again and looking forward to continuing this conversation.

Scholarly objectivity is not difficult to maintain. It is impossible to maintain. More than that, it does not exist, and we would all be better off if everybody stopped pretending that there is such a thing. Scholars are as biased as everybody else. When they express opinions, what should be scrutinized is the logic of their arguments and the quality of the evidence they marshal in defense of those arguments. If they claim to be objective, that should arouse justifiable suspicion.