By Aila M. Matanock and Miguel García-Sánchez.

Knowing when civilians are likely to side with counterinsurgents has been a crucial component in the US’s involvement in the wars in Afghanistan, Iraq, and Syria. A common policy prescription in counterinsurgency campaigns has been to win social support in order to produce a victory on the battlefield. Most recently, many recommendations and predictions on how to defeat ISIS in the Middle East focus on winning hearts and minds.

Distinguishing civilians supportive of counterinsurgents is central to estimating chances for success in combat, but doing so can be difficult. That is because people often misrepresent whom they are likely to support when asked. Public opinion surveys routinely show strong support for the counterinsurgents. Puzzlingly, these reported rates seem to remain strong and consistent even during long-standing contentious campaigns and even in contexts in which the counterinsurgents have had little success against insurgents. In Colombia, one of the longest standing counterinsurgencies spanning more than 50 years, the Americas Barometer-Latin America Public Opinion Project (LAPOP) survey has found that individuals claim high levels of trust over time – at almost 70 percent and with low variance – in the forces, such as the military, fighting the insurgency (see Figure 1 below).

This consistently strong support for the military, reported by citizens in Colombia across these surveys, persists despite the periodic setbacks in the counterinsurgency and despite the human rights scandals that have come to light during the campaign. It even holds within surveys across guerrilla-controlled and coca-producing regions, where the military has fared the worst, presumably due in part to a lack of cooperation from the population.

Figure 1: Survey Response – Trust in the Colombian Military (Matanock and García-Sánchez 2016)

Are people not reporting truthfully? And if so, how can we determine who they really support?

We suggest that individuals have incentives to falsify their reported rate of support when states try to measure social support among the population, but that their true rate of support can be measured. Certain types of survey questions offer indirect methods of expressing support, conceal individuals’ responses, and reduce social pressure and fear. These features allow individuals to deviate from standard answers when they wish and, potentially, to be more truthful.

We conducted a survey experiment that randomly assigned respondents to direct or indirect questions about support for the military. The 1,897 face-to-face interviews were conducted in May 2010 in Colombia, a country with an ongoing insurgency and variation in state reach. The survey was identified as having received funding from the US government, an ally of the Colombian state, which added to the sense that direct questions may require the standard answer in order to avoid social sanctioning or punishment.

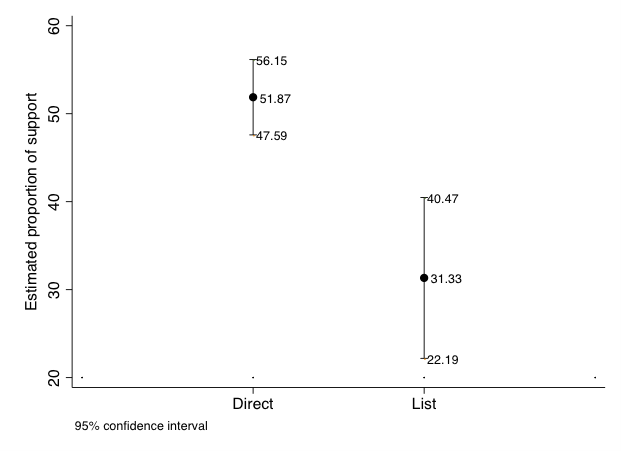

Respondents reported lower levels of support for the military in the indirect measures than in the direct measures across all contexts (Figure 2). Support for the military is 52.8 percent when measured using the direct question, and 31.3 percent when measured using the list experiment.

Figure 2: Estimated Proportion of Support for the Military – Direct Versus Indirect Measures (Matanock and García-Sánchez 2016)

And the difference is largest in municipalities with guerrilla control and coca production. Individuals who rely on insurgents to protect them and who engage in illicit activities may be especially unsupportive of counterinsurgents but also especially hesitant to reveal that information in surveys tied to the state, suspecting that counterinsurgents may be able to use it to negatively impact their lives or their livelihoods. In guerrilla-controlled municipalities, support reported for the military is 40 to 50 percentage points lower in the indirect measure than in the direct measure (as opposed to just over 20 percentage points overall), and in municipalities with coca cultivation it is over 30 percentage points lower. In these areas, individuals may support the military less because it does not protect or provide for them, but they may fear punishment from the state for saying so, at least unless indirect questions guarantee that their responses are concealed.
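The list-experiment figures above come from an indirect question format whose mechanics are not spelled out here. As a rough illustration only, the sketch below assumes the standard design: a control group counts how many of several non-sensitive statements it agrees with, a treatment group gets the same list plus the sensitive statement, and the difference in mean counts estimates the share endorsing the sensitive item. The function names, the three baseline items, the 0.5 agreement rate, and the simulated respondents are all hypothetical and are not the study's data or code.

```python
import random
from statistics import mean


def list_experiment_estimate(control_counts, treatment_counts):
    """Difference in mean item counts between treatment and control.

    Under the standard list-experiment assumptions, this estimates the
    share of respondents who endorse the sensitive item.
    """
    return mean(treatment_counts) - mean(control_counts)


def simulate(n, true_support, n_baseline_items=3, seed=0):
    """Generate synthetic item counts (hypothetical data, for illustration)."""
    rng = random.Random(seed)
    control, treatment = [], []
    for _ in range(n):
        # Each respondent agrees with each baseline item with probability 0.5.
        baseline = sum(rng.random() < 0.5 for _ in range(n_baseline_items))
        if rng.random() < 0.5:
            # Control group: sees only the baseline items.
            control.append(baseline)
        else:
            # Treatment group: the sensitive item is added to the list.
            supports = rng.random() < true_support
            treatment.append(baseline + int(supports))
    return control, treatment


control, treatment = simulate(n=20000, true_support=0.30)
estimate = list_experiment_estimate(control, treatment)
print(f"estimated support from list experiment: {estimate:.3f}")
```

Because respondents report only a count, no individual answer to the sensitive item is revealed, which is what lets the estimate diverge from the direct question.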

All of this seems obvious. Surveys conducted in conflict zones that ask sensitive questions should include list experiments that allow for more truthful answers. But the reality is that many states and nongovernmental organizations, working to secure and rebuild during insurgencies, continue to ask direct questions of individuals in order to gauge support. They are then using the results of these surveys to claim that the population is “on their side.”

Governments and other organizations conduct numerous surveys in conflict zones seeking to determine which side the public supports. They then rely on the results of these surveys to determine counterinsurgency strategy, even though many of these surveys may not reveal that information. Preferences may be detectable before “surprising” outcomes take place. Scholars have figured out ways to design surveys to elicit more truthful responses. Our work in Colombia shows one way this could be done, using indirect questions, which allow individuals to remain more anonymous in their responses. Another method is to show subtle support for the nonstandard answer. An example comes from Nicaragua: most polls suggested that the former rebel leader who had taken control of the state would win the 1990 election. The exception was a poll in which interviewers used a pen with the opposition party’s initials on it, and it came closest to predicting the upset. We posit that these methods may reduce incentives to default to standard answers, allowing us to better study social support in challenging – but critical – environments, including counterinsurgency contexts.

Aila M. Matanock is an Assistant Professor of Political Science at the University of California, Berkeley and a regular contributor at Political Violence @ a Glance. Miguel García-Sánchez is an Associate Professor of Political Science at the Universidad de los Andes in Bogotá. He is also Associate Director of Observatorio de la Democracia.